Recently I found myself in a situation where a side project was generating a lot of AWS SQS costs. After digging into it with the team, we discovered that we had configured short polling for 150+ queues.

After some more research, we realized that switching to long polling could save us money. Still, we needed to determine whether this would impact our product and, more importantly, our customers.

I am writing down the differences between SQS short and long polling to save you the same round trip.

Let's first take a look at SQS and the available queue types.

What is Amazon SQS?

SQS, or Simple Queue Service, is a managed message queue hosted and operated by AWS. Interestingly, it was the first service offered by AWS. Consumers of SQS poll messages from the queue and process them. SQS supports two queue types: standard queues and FIFO queues. While a consumer processes a received message, SQS hides that message from other consumers; in FIFO queues, messages that belong to the same message group are additionally delivered strictly one after another.

I think the main benefits of using a managed service are obvious:

Availability

Reliability

Scalability

and so forth.

Message queues were among the first tools that let applications process events asynchronously, and they still underpin many event-driven and serverless use cases. They are a great tool for implementing asynchronous architectures in an application.

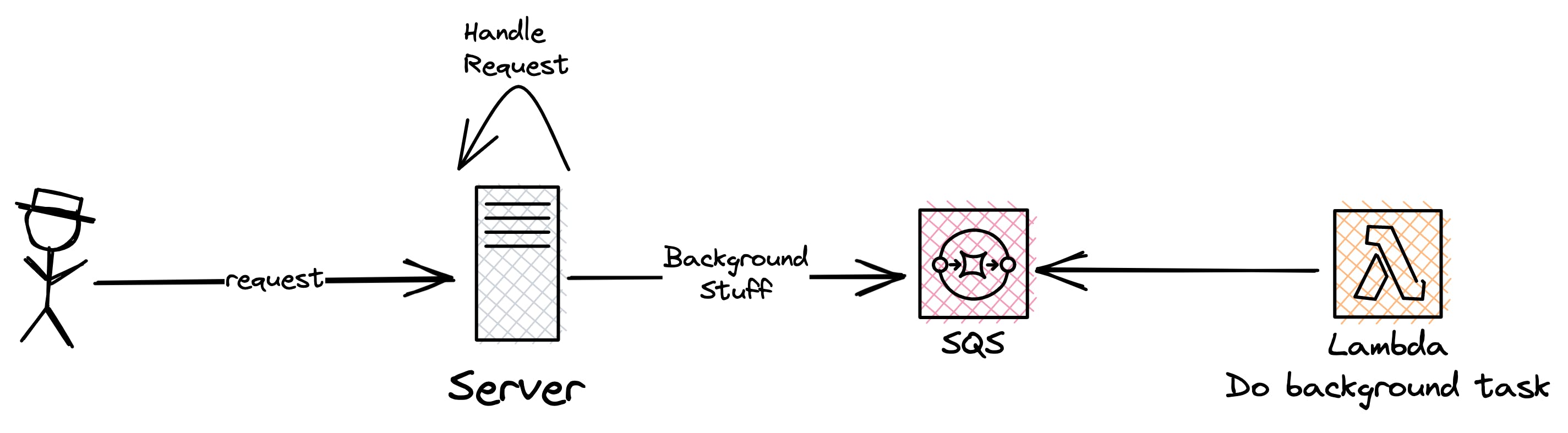

A simple use case could look like this:

A user sends a request to your application, and one task within that request is computationally intensive. You want a fast response for your customer, and the task's result does not have to be included in the response. So your server sends a message to the queue, and a Lambda function polls messages from the queue. Once a message arrives, the Lambda function reads its content and processes the task.
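The flow above boils down to a producer that enqueues work and a consumer that processes it later. The sketch below mimics it in plain Python, with an in-memory `queue.Queue` standing in for SQS and a worker thread standing in for the Lambda function. `handle_request`, `expensive_task` and the `None` shutdown sentinel are hypothetical names for illustration, not SQS or Lambda APIs.

```python
import queue
import threading

# In-memory stand-in for the SQS queue (assumption: this only illustrates the flow).
task_queue = queue.Queue()
results = []


def expensive_task(value):
    """Hypothetical computationally intensive task."""
    return value * value


def handle_request(value):
    """Hypothetical request handler: enqueue the task and respond right away."""
    task_queue.put(value)
    return "202 Accepted"


def worker():
    """Stand-in for the Lambda consumer: process messages as they arrive."""
    while True:
        value = task_queue.get()
        if value is None:  # shutdown sentinel used only in this sketch
            break
        results.append(expensive_task(value))


consumer = threading.Thread(target=worker)
consumer.start()
responses = [handle_request(v) for v in (2, 3, 4)]
task_queue.put(None)
consumer.join()
print(responses)  # ['202 Accepted', '202 Accepted', '202 Accepted']
print(results)    # [4, 9, 16]
```

The request handler never waits for `expensive_task`; it only pays the cost of enqueueing, which is what keeps the response fast.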

You are happy because you built a cool asynchronous application, your customer is satisfied with the fast response time of your service, and AWS is happy that you use their services.

Standard Queue

Standard queues are the default queue type in SQS. They support a nearly unlimited number of API calls per second for all actions (e.g., SendMessage, ReceiveMessage and DeleteMessage). They provide at-least-once delivery, meaning that the same message may be delivered to the consumer more than once. This happens only occasionally, but it can occur. Another important note is that standard queues do not guarantee message ordering: the queue makes a best effort to preserve the order of messages but does not guarantee it.

A standard queue is a good fit if you don't care about message order and your system can safely process the same message more than once.
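One common way to make a consumer resilient to at-least-once delivery is to make it idempotent by remembering which messages it has already handled. The sketch below keeps processed IDs in an in-memory set. `handle_message`, the account balance and the message IDs are hypothetical, and the sketch assumes each message carries an ID that stays the same across redeliveries. A real service would keep these IDs in a durable, shared store, since an in-memory set is lost on restart and invisible to other consumers.

```python
processed_ids = set()  # assumption: a durable shared store in a real system
account = {"balance": 0}


def handle_message(message_id, amount):
    """Apply a message's effect once; return False for a duplicate delivery."""
    if message_id in processed_ids:
        return False  # already processed: skip the side effect
    account["balance"] += amount
    processed_ids.add(message_id)
    return True


# The standard queue delivers message "m-1" twice.
deliveries = [("m-1", 10), ("m-2", 5), ("m-1", 10)]
applied = [handle_message(mid, amount) for mid, amount in deliveries]
print(applied)             # [True, True, False]
print(account["balance"])  # 15
```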

FIFO Queue

In applications where the order of messages is crucial, you should rely on FIFO queues. First-In-First-Out (FIFO) queues guarantee that messages are delivered in the order the queue receives them.

There are a couple of use cases for this type of queue. Imagine an e-commerce application where SQS handles your order management. In this case, you want to process messages in the order they arrived. Otherwise, you may oversell an item, or the order that was placed last might get the last available article. Your customers surely would not be happy about that.

In addition to ordering, FIFO queues also provide exactly-once processing. If you send a message with the same deduplication ID again within the 5-minute deduplication interval, SQS accepts it but does not deliver the duplicate. Within that window, your system therefore does not need to handle duplicates of the messages it sends.
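The deduplication rule can be modeled in a few lines. This is a toy model, not how SQS implements it: `FifoDedupSketch` and its `send` method are hypothetical, time is passed in explicitly as seconds, and only the rule from the text is covered (a repeated deduplication ID inside the 5-minute window is accepted but not enqueued again).

```python
DEDUP_INTERVAL_SECONDS = 5 * 60  # the 5-minute deduplication interval


class FifoDedupSketch:
    """Toy model of FIFO deduplication by deduplication ID."""

    def __init__(self):
        self._sent_at = {}  # deduplication ID -> time of the last enqueued send
        self.messages = []  # enqueued bodies, in arrival order

    def send(self, body, dedup_id, now):
        """Enqueue body unless dedup_id was enqueued within the interval; return whether it was."""
        sent_at = self._sent_at.get(dedup_id)
        if sent_at is not None and now - sent_at < DEDUP_INTERVAL_SECONDS:
            return False  # duplicate: accepted by the queue, but not delivered again
        self._sent_at[dedup_id] = now
        self.messages.append(body)
        return True


q = FifoDedupSketch()
print(q.send("order-42", dedup_id="order-42", now=0))    # True
print(q.send("order-42", dedup_id="order-42", now=120))  # False (within 5 minutes)
print(q.send("order-42", dedup_id="order-42", now=400))  # True (window has passed)
print(q.messages)  # ['order-42', 'order-42']
```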

SQS offers many more features and small tweaks that you can configure for your specific architecture, but covering them all would take a book. If you want a deeper dive into SQS, check out AWS Fundamentals.

We are finally going to come to the main topic for the day: Polling messages from SQS 🎉

Why polling?

So the first question is, why do we need to poll messages from a queue? This gets a little bit deeper into the reason why the concept of message queues was created in the first place.

The main idea behind polling is that queues were created to store messages for a given time until a consumer can collect and process them.

Compared to other event-driven approaches, e.g., event buses, messages remain in the queue until they have been processed successfully and deleted by the consumer. A bus, on the other hand, sends out the event and does not necessarily care whether it was processed successfully (this does not apply to all event buses; AWS EventBridge, for example, lets you configure retries and dead-letter queues).

A high-throughput system may be overwhelmed when events are pushed to it through an event bus. A queue, on the other hand, stores the messages and persists them until an application thread is ready to process them.

Polling also makes messages invisible: once a consumer receives a message, SQS hides it from all other consumers for the duration of the visibility timeout, which prevents the same message from being processed concurrently. This comes in handy when you think about a multi-threaded server.

To conclude, polling allows storing messages until our system is ready to process them. Additionally, it ensures that multiple application threads do not process the same message at the same time: while one consumer holds a message, no other consumer can receive it.
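The visibility mechanism can be illustrated with a small in-memory model. `InMemoryQueue` and its methods are hypothetical and time is passed in explicitly; the point is only the behavior described above: a received message is hidden for `visibility_timeout` seconds (30 in this sketch), and it reappears if the consumer does not delete it in time.

```python
from dataclasses import dataclass, field


@dataclass
class InMemoryQueue:
    """Toy queue that hides received messages for visibility_timeout seconds."""

    visibility_timeout: float
    messages: dict = field(default_factory=dict)         # message ID -> body
    invisible_until: dict = field(default_factory=dict)  # message ID -> time it reappears

    def send(self, message_id, body):
        self.messages[message_id] = body

    def receive(self, now):
        """Return visible messages and hide them from other consumers."""
        visible = [
            (mid, body)
            for mid, body in self.messages.items()
            if self.invisible_until.get(mid, 0.0) <= now
        ]
        for mid, _ in visible:
            self.invisible_until[mid] = now + self.visibility_timeout
        return visible

    def delete(self, message_id):
        """Called after successful processing; the message is gone for good."""
        self.messages.pop(message_id, None)
        self.invisible_until.pop(message_id, None)


q = InMemoryQueue(visibility_timeout=30)
q.send("m-1", "resize image")
print(q.receive(now=0))    # consumer A gets [('m-1', 'resize image')]
print(q.receive(now=10))   # consumer B gets [] because m-1 is hidden
print(q.receive(now=31))   # A never deleted it, so it reappears: [('m-1', 'resize image')]
q.delete("m-1")
print(q.receive(now=100))  # []
```

Deleting the message after successful processing is what makes it disappear; hiding alone only buys the consumer time.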

Short polling for an SQS Queue

Short polling is the default for SQS unless configured otherwise. Because SQS only queries a sample of its servers, messages are received very quickly (we are talking tens or low hundreds of milliseconds). In short (hehe), SQS only returns a subset of your messages when short polling: it samples a subset of its servers (based on a weighted random distribution), and only messages stored on the sampled servers are returned. Keep in mind that even though SQS looks like a single service, it is a distributed system, and many servers store your messages behind the scenes.

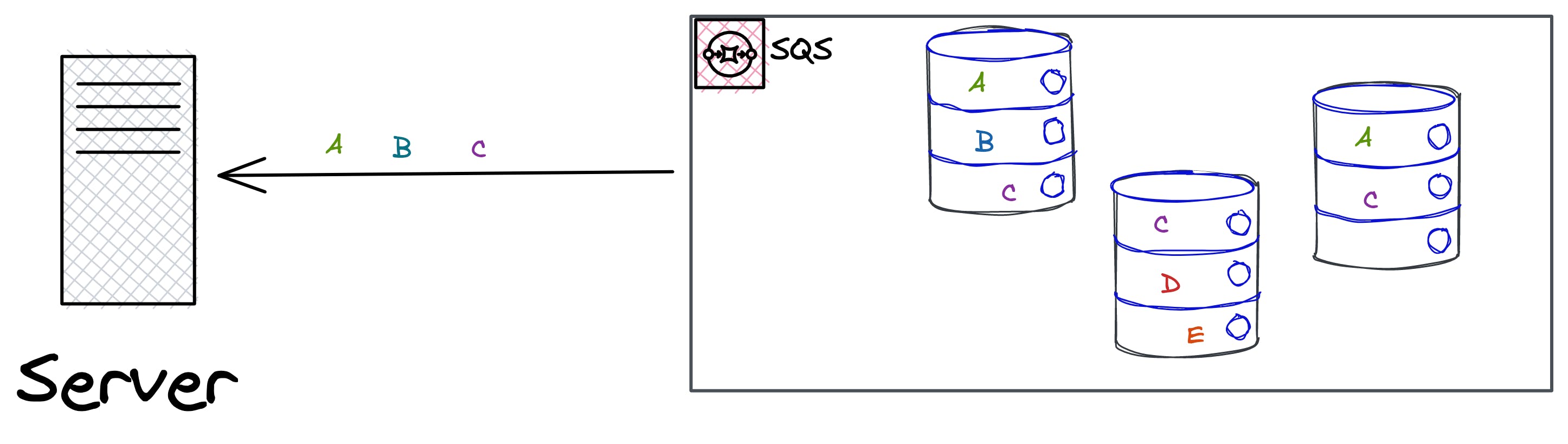

Subsequent requests to the same queue eventually return the remaining messages. Here is an example:

Your server is short polling an SQS queue. On the first poll, it only receives messages from the sampled servers, in this case messages A, B and C. Messages D and E are not returned by this ReceiveMessage request.

On the one hand, this means the request is faster and messages A, B and C can be processed quickly. On the other hand, your server needs to make an additional ReceiveMessage request to get D and E. In the worst case, a request returns no messages at all, even though there are messages in the queue. This is even more noticeable for queues that only hold a few messages.
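The sampling effect can be simulated to see why several requests, some of them empty, may be needed. This is a simplified model under stated assumptions: the layout of messages A to E across four servers and the sample size are made up, sampling is uniform here (the text says SQS uses a weighted random distribution), and received messages are simply removed, whereas real SQS hides them until they are deleted.

```python
import random


def short_poll(servers, rng, sample_size):
    """Return messages from a random sample of servers only."""
    received = []
    for index in rng.sample(range(len(servers)), sample_size):
        received.extend(servers[index])
        servers[index].clear()  # simplification: treat received messages as consumed
    return received


def long_poll(servers):
    """Query every server, so no stored message is missed."""
    received = [message for server in servers for message in server]
    for server in servers:
        server.clear()
    return received


# Hypothetical layout of the five messages from the example across four servers.
layout = [["A", "B"], ["C"], ["D"], ["E"]]

servers = [list(s) for s in layout]
rng = random.Random(42)
polls, empty_responses, collected = 0, 0, []
while any(servers):
    polls += 1
    batch = short_poll(servers, rng, sample_size=2)
    if not batch:
        empty_responses += 1
    collected.extend(batch)
print(f"short polling: {polls} requests, {empty_responses} empty")

servers = [list(s) for s in layout]
print(sorted(long_poll(servers)))  # ['A', 'B', 'C', 'D', 'E']
```

Every iteration of the loop corresponds to one billed ReceiveMessage request, including the empty ones.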

So, we are making requests, and nothing is returned. Remembering AWS's pay-as-you-go model, we pay for requests that return nothing, even though there is something in the queue. Okay, in queues with many messages we will rarely receive an empty response. Still, all these requests accumulate costs.
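A back-of-the-envelope calculation shows how these requests add up across many queues. All inputs are assumptions for illustration, not our actual bill: 150 empty queues, a consumer per queue that short polls once per second, a 30-day month, and a price of USD 0.40 per million requests (check current pricing for your region and queue type). The free tier is ignored. For comparison, a 20-second long poll on an empty queue sends at most one request every 20 seconds.

```python
SECONDS_PER_MONTH = 30 * 24 * 60 * 60  # assumption: 30-day month
PRICE_PER_MILLION = 0.40  # assumption: USD per million requests; check current pricing


def monthly_requests(queues, seconds_between_requests):
    """Requests per month if each queue gets one ReceiveMessage call per interval."""
    return queues * SECONDS_PER_MONTH / seconds_between_requests


def monthly_cost(requests):
    """Request cost in USD, ignoring the free tier and payload-size chunking."""
    return requests / 1_000_000 * PRICE_PER_MILLION


short = monthly_requests(queues=150, seconds_between_requests=1)
long_ = monthly_requests(queues=150, seconds_between_requests=20)
print(f"short polling: {short:,.0f} requests, ${monthly_cost(short):.2f}")
# short polling: 388,800,000 requests, $155.52
print(f"long polling:  {long_:,.0f} requests, ${monthly_cost(long_):.2f}")
# long polling:  19,440,000 requests, $7.78
```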

Short polling returns responses immediately, even if the polled Amazon SQS queue is empty.

Long polling for an SQS Queue

Long polling on SQS is enabled by setting the wait time for ReceiveMessage (WaitTimeSeconds) to a value greater than 0, either per request or as the queue's default via the ReceiveMessageWaitTimeSeconds attribute. You can wait for messages for up to 20 seconds. This greatly reduces empty responses in two cases:

No messages available in the queue

Messages available but not included in a response

In general, by waiting, the queue can gather more messages from its highly distributed servers, which reduces the overall number of ReceiveMessage requests sent to the queue. A long-poll request returns as soon as at least one message is available and only returns an empty response if the wait time expires before any message arrives. So you can still receive empty responses, especially if the wait time is configured to a very small value, e.g., 1 second.
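Since our problem was 150+ queues configured for short polling, setting the queue-level default is the natural fix. A minimal sketch, assuming a boto3-style SQS client is passed in: `set_queue_attributes` with the `ReceiveMessageWaitTimeSeconds` attribute is the real SQS action, while `enable_long_polling`, `RecordingClient` and the queue URLs are hypothetical helpers so the example runs offline.

```python
from typing import Any, Iterable

MAX_WAIT_TIME_SECONDS = 20  # SQS allows long-poll waits of up to 20 seconds


def enable_long_polling(sqs_client: Any, queue_urls: Iterable[str], wait_seconds: int = 20) -> int:
    """Set ReceiveMessageWaitTimeSeconds on every queue; return how many were updated."""
    if not 1 <= wait_seconds <= MAX_WAIT_TIME_SECONDS:
        raise ValueError("wait_seconds must be between 1 and 20 to enable long polling")
    updated = 0
    for url in queue_urls:
        sqs_client.set_queue_attributes(
            QueueUrl=url,
            Attributes={"ReceiveMessageWaitTimeSeconds": str(wait_seconds)},  # values are strings
        )
        updated += 1
    return updated


class RecordingClient:
    """Offline stand-in that records calls instead of talking to AWS."""

    def __init__(self):
        self.calls = []

    def set_queue_attributes(self, QueueUrl, Attributes):
        self.calls.append((QueueUrl, Attributes))


fake = RecordingClient()
urls = [f"https://sqs.example/queue-{i}" for i in range(3)]  # hypothetical URLs
print(enable_long_polling(fake, urls))  # 3
print(fake.calls[0])  # ('https://sqs.example/queue-0', {'ReceiveMessageWaitTimeSeconds': '20'})
```

With boto3 installed and credentials configured, you would pass `boto3.client("sqs")` instead of the fake, and the queue URLs could come from `list_queues`.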

Another benefit is that SQS queries all of its servers rather than a sample, so false empty responses (where messages are available but stored on servers that were not sampled) are reduced.

A major advantage is that a message is still returned as soon as it becomes available.

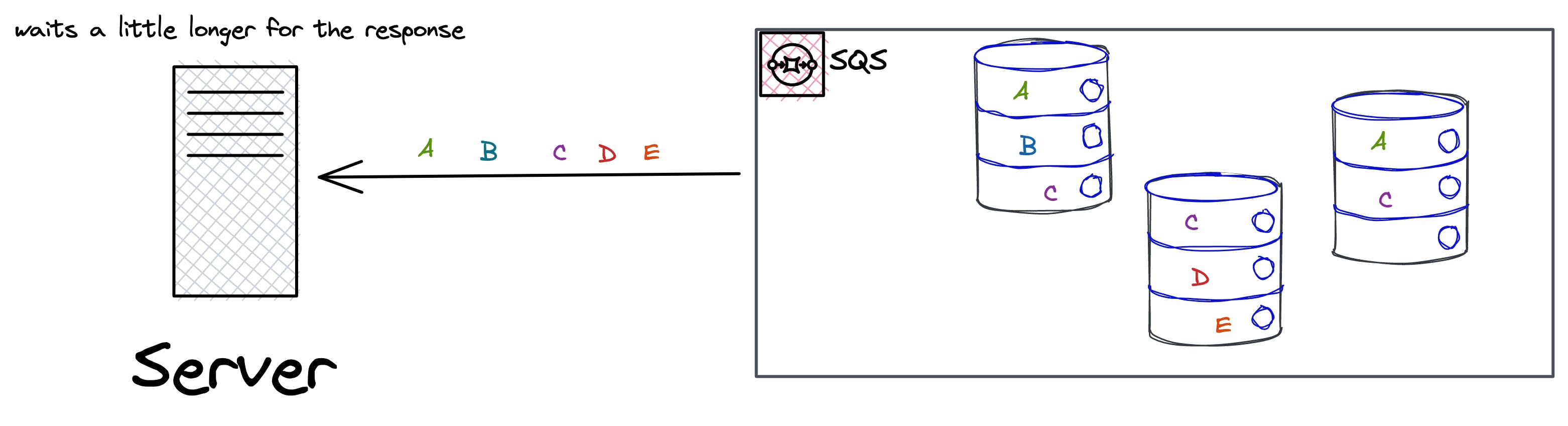

Long polling just waits for a little longer, so that SQS can query all the servers for available messages:

Compared to short polling, the messages that were not included in the first ReceiveMessage response are now returned as well.

To sum up long polling: you set a wait time during which a ReceiveMessage request blocks until messages become available. If no messages arrive within that time, the request returns empty and the consumer sends the next ReceiveMessage request. If there are messages in the queue to consume, the request does not block. So the consumer either waits up to the configured time, which reduces the number of requests considerably, or returns immediately with the available messages.
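Python's `queue.Queue.get(timeout=...)` behaves much like a long poll and makes the two outcomes easy to observe. This is only an analogy, not SQS: the hypothetical `long_poll` helper returns a single message or `None`, whereas SQS can return up to 10 messages per response.

```python
import queue
import threading
import time


def long_poll(q, wait_time_seconds):
    """Return a message as soon as one is available, or None once the wait time expires."""
    try:
        return q.get(timeout=wait_time_seconds)
    except queue.Empty:
        return None


inbox = queue.Queue()

# Case 1: nothing arrives, so the call blocks for the whole wait time and returns empty.
start = time.monotonic()
empty_result = long_poll(inbox, wait_time_seconds=0.2)
empty_elapsed = time.monotonic() - start

# Case 2: a message arrives after 0.1 s; the call returns right away instead of waiting 2 s.
threading.Timer(0.1, inbox.put, args=("order-42",)).start()
start = time.monotonic()
message = long_poll(inbox, wait_time_seconds=2)
message_elapsed = time.monotonic() - start

print(empty_result, round(empty_elapsed, 1))
print(message, round(message_elapsed, 1))
```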

Long polling lets you consume messages from your Amazon SQS queue as soon as they become available.

As an important side note, the HTTP timeout of your ReceiveMessage request must be longer than your WaitTimeSeconds (which enables long polling). Otherwise, you will run into HTTP errors, as your request times out before the wait time for gathering messages is over.
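A small guard can catch this misconfiguration before any request is sent. A minimal sketch: `build_receive_params` is a hypothetical helper and the queue URL is made up; the returned keys are the ReceiveMessage parameters `QueueUrl`, `WaitTimeSeconds` and `MaxNumberOfMessages`, and the limits (0 to 20 seconds, 1 to 10 messages) are the SQS limits.

```python
MAX_WAIT_TIME_SECONDS = 20
MAX_MESSAGES_PER_RECEIVE = 10


def build_receive_params(queue_url, wait_time_seconds, client_read_timeout, max_messages=10):
    """Build ReceiveMessage keyword arguments and reject a read timeout that is too short."""
    if not 0 <= wait_time_seconds <= MAX_WAIT_TIME_SECONDS:
        raise ValueError("WaitTimeSeconds must be between 0 and 20")
    if not 1 <= max_messages <= MAX_MESSAGES_PER_RECEIVE:
        raise ValueError("MaxNumberOfMessages must be between 1 and 10")
    if client_read_timeout <= wait_time_seconds:
        raise ValueError("the HTTP read timeout must be longer than WaitTimeSeconds")
    return {
        "QueueUrl": queue_url,
        "WaitTimeSeconds": wait_time_seconds,
        "MaxNumberOfMessages": max_messages,
    }


params = build_receive_params("https://sqs.example/orders", 20, client_read_timeout=30)
print(params["WaitTimeSeconds"])  # 20

try:
    build_receive_params("https://sqs.example/orders", 20, client_read_timeout=10)
except ValueError as error:
    print(error)  # the HTTP read timeout must be longer than WaitTimeSeconds
```

With boto3, the read timeout is configured on the client, e.g. `boto3.client("sqs", config=botocore.config.Config(read_timeout=30))`, and the parameters are passed as `receive_message(**params)`. botocore's default read timeout is 60 seconds, so this mainly bites when you lower it or use a different HTTP client.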

So, what polling should I use?

Yes, you guessed it already: It depends.

AWS says that long polling is preferable to short polling in most cases.

The findings above support this. The name short polling may imply that messages are handled faster, but we have seen that this does not have to be the case. We cannot determine whether a message will be included in a short polling response, or how many requests are needed until a specific message is included. Long polling, on the other hand, blocks the consumer until at least one message arrives or the wait time expires. To process messages as soon as they become visible, we should prefer long polling over short polling.

Overall, most recommendations you will find favor long polling. Still, short polling has some valid use cases, and we should think about what we are implementing before deciding:

Short polling can be suitable for busy queues that we can assume always have messages available. Another valid case is an application that polls in its main thread (remember that long polling blocks), where only short blocking times are acceptable before the thread resumes execution. If your application uses a single thread to poll multiple queues, long polling may not work, as the thread is blocked and messages from the other queues cannot be polled in the meantime.

In this case, thinking of short polling as a round-robin over the queues may help to see why it is a valid choice.
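The round-robin idea can be sketched with a single loop that visits each queue in turn and never blocks. Plain `deque` objects stand in for SQS queues, and `round_robin_short_poll` is a hypothetical helper that takes at most one message per visit. Against real SQS, each visit would be a billed ReceiveMessage call, even when the queue turns out to be empty.

```python
from collections import deque


def round_robin_short_poll(queues, rounds):
    """Visit each queue in turn with a non-blocking receive; nothing ever blocks the thread."""
    handled = []
    for _ in range(rounds):
        for name, pending in queues.items():
            # Short-poll analogy: check and move on immediately, even if the queue is empty.
            if pending:
                handled.append((name, pending.popleft()))
    return handled


queues = {
    "orders": deque(["o1", "o2"]),
    "emails": deque(["e1"]),
    "reports": deque(),
}
result = round_robin_short_poll(queues, rounds=2)
print(result)  # [('orders', 'o1'), ('emails', 'e1'), ('orders', 'o2')]
```

A long poll on `orders` here could stall the loop for up to 20 seconds while `emails` waits, which is exactly the trade-off described above.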

Final words

I wanted to offer you, folks, a rough overview of the polling options in SQS. In the beginning, I was confused by the names and thought short polling would be the best option (come on, anything that sounds fast is always tempting). Of course, we could compare the polling strategies across many more use cases and in more detail.

As it turned out, preferring long polling over short polling has many advantages, and I paid for this finding with some unnecessary extra AWS costs. In the next project I build with SQS, every queue will be configured to use long polling.

If you are interested in queues and don't want to deal with all this fine-tuning stuff, check out serverlessq.com. It's a service that lets you create a ready-to-use queue with a couple of clicks. All the heavy lifting is done for you, and after a couple of seconds, you are ready to send a message to your very own queue 🚀